INTRODUCTION

Although a number of methods for the measurement of energy deposition due to penetrating ionising radiation have been developed, the fundamental instrument remains the gas ionisation chamber. In fact the original unit for radiation exposure dose, the Röntgen, was defined in terms of the primary ionisation produced in one cubic centimetre of dry air at standard temperature and pressure; primary ionisation being that produced directly by the incident radiation, i.e. not subject to the gas amplification which is employed in the ion chamber's derivatives, proportional counters and Geiger counters.

Ionisation results from the absorption of energy from incident x ray or gamma photons in any medium and is directly measurable in gases or semiconductors. Current dose/dose-rate units require calibration in terms of energy absorbed in a given mass of medium (usually tissue) but the practical method of measurement - in this case ionisation produced in gas within a chamber - remains the same.

The relationship between the practical measurement of exposure dose/dose-rate and the absorbed dose/dose-rate is discussed at the end of this note.

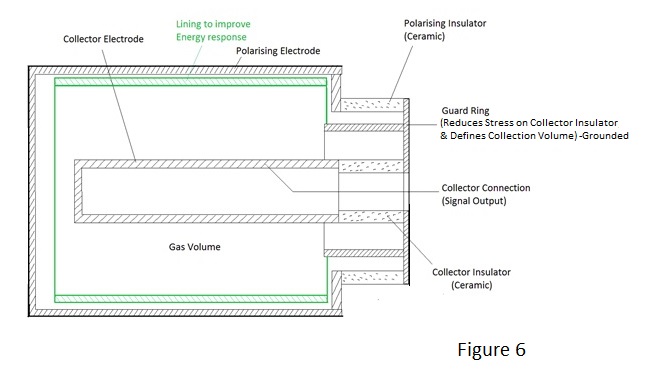

As with many fundamental units, the unit for exposure is defined in a manner which is impractical for everyday use; calling for measurement in free air with no surrounding material to absorb or scatter the incident radiation. Such a free air chamber may be designed for use in a Standards Laboratory but for practical measurement it is necessary to contain the air in a closed chamber which houses the collecting electrodes and in many cases constitutes one of them.

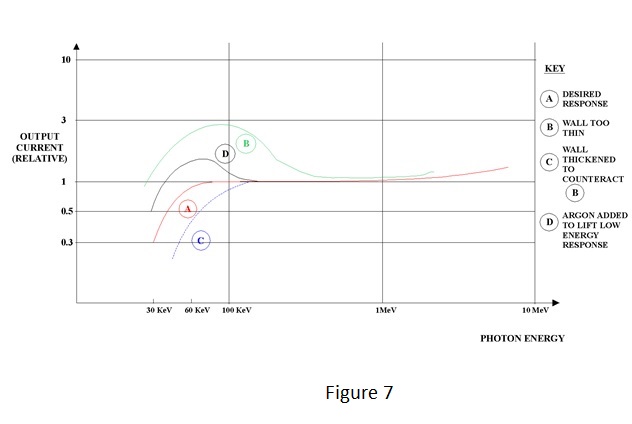

This note attempts to examine, in a non-mathematical manner, the advantages and disadvantages of enclosing the gas within the confines of a sealed chamber. Before dealing with the practical ionisation chamber, it is perhaps worthwhile to consider the phenomenon of gas ionisation and how it is caused.

FUNDAMENTALS OF IONISATION

X or gamma radiation passing through the volume of gas in a chamber loses some of its energy by ionising the gas within.

This means that for some of the gas atoms an electron orbiting the nucleus is ejected, leaving each of these atoms with a positive charge equal to the negative charge of the electron ejected: removal of a negative charge from a neutral body composed of equal numbers of positive and negative charges leaves the body with a net positive charge. These positively charged atoms are called ions and thus the effect of the radiation is to produce pairs of electrons and ions within the gas, normally referred to as ion pairs.

Unlike charges attract so the reader might ask, ‘why don't the pairs recombine immediately?’ The reason is that the electrons are emitted with sufficient velocity to remove them from the range of influence of the parent atom, somewhat akin to the concept of escape velocity for space probes. In fact preferential recombination, as this is called, does occur under certain circumstances but not to a significant degree with x or gamma radiation. There is, of course, no reason why an electron should not recombine with the ion of a completely different atom. The way that this is avoided will be discussed in due course but first it is desirable to examine other aspects of ionisation.

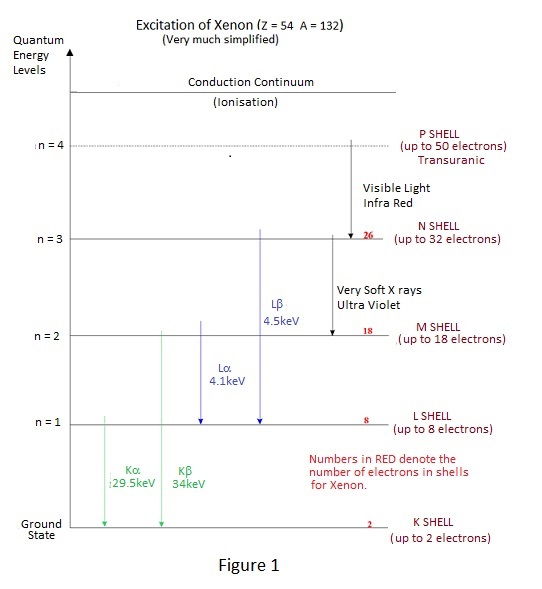

For instance, how much energy is lost by an incident photon in the creation of an ion pair? This quantity is usually symbolised by 'W' - the mean energy per ion pair or mean ionisation potential. W for air is quoted as being in the range 32.5 to 35 electron volts, according to the reference consulted - a degree of uncertainty which is hardly a good basis for calibration. W factors for other common gases are in the range 28 to 42 eV. The interested reader, consulting a reference of physical constants, might be puzzled by a list of ionisation potentials for various gases which are about half of this range or lower. These are the actual ionisation potentials necessary to raise an electron from a tightly bound valence orbit to conduction level. The reason for the difference is that only about half of the interactions of x or gamma rays with atoms result in ionisation. The remainder cause only excitation of the atoms. Excitation means that an electron does not actually escape from the interacting atom but is briefly raised to a higher orbit or energy level before falling back to its original orbit or ground state. Return to the ground state from excitation results in the emission of photons of appropriate energy, with the highest energies for electron orbits closer to the nucleus.
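As a rough illustration of what W implies, the sketch below estimates the mean number of ion pairs, and the corresponding charge of one sign, produced when a given amount of energy is absorbed in the gas. The value of 34 eV for air is an assumption chosen from within the range quoted above, and the function names are hypothetical.

```python
# Minimal sketch: ion pairs and charge liberated for a given energy absorbed.
# ASSUMPTION: W for air is taken as 34 eV, a value inside the 32.5 to 35 eV
# range quoted in the text; substitute the figure from your own reference.
W_AIR_EV = 34.0
ELEMENTARY_CHARGE_C = 1.602176634e-19  # charge of one electron, in coulombs


def ion_pairs(energy_absorbed_ev: float, w_ev: float = W_AIR_EV) -> float:
    """Mean number of ion pairs produced when energy_absorbed_ev is absorbed."""
    if w_ev <= 0:
        raise ValueError("W must be positive")
    return energy_absorbed_ev / w_ev


def liberated_charge_coulombs(energy_absorbed_ev: float,
                              w_ev: float = W_AIR_EV) -> float:
    """Charge of one sign liberated, assuming one electronic charge per ion pair."""
    return ion_pairs(energy_absorbed_ev, w_ev) * ELEMENTARY_CHARGE_C


if __name__ == "__main__":
    energy_ev = 100e3  # a 100 keV photon, assumed to be fully absorbed in the gas
    print(f"ion pairs: {ion_pairs(energy_ev):.0f}")
    print(f"charge:    {liberated_charge_coulombs(energy_ev):.3e} C")
```

With these assumptions a fully absorbed 100 keV photon yields roughly 2900 ion pairs, which shows why the spread in quoted W values feeds directly into calibration uncertainty.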

An indication of the fall back photon energies due to excitation and ionisation for the noble gas xenon is given by Figure 1.

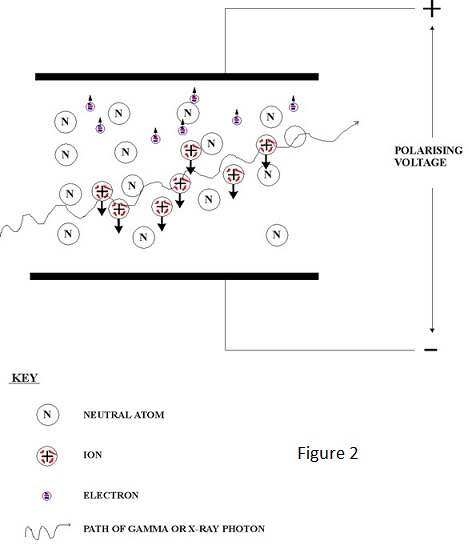

For excitation at the higher energy levels close to ionisation, fall back is accompanied by the emission of a very soft x ray photon, i.e. one of energy slightly less than the actual ionisation energy - typically 15 eV or lower. This gives rise to an interesting possibility. Consider a chamber filled with a mixture of two gases, one of which has an ionisation energy lower than the energy of the excitation photon emitted by the other. In these circumstances the excitation photon of the latter is almost certain to ionise the former - the probability of interaction being much greater when the incident and ionisation energy levels are close - and W for some mixed gases is consequently lower than that for either of the individual components. The effect of an x or gamma ray interaction with an atom of one gas causing the ionisation of another gas is not confined to excitation; it also occurs with ionisation, because the ionised atom also emits a soft x ray as it restores electron orbits following ejection of an electron. In fact ionisation chambers often contain mixed gases but, as will be seen in due course, W reduction is not the prime purpose.

The presence of ion pairs in a gas means that it is electrically charged and the magnitude of the charge is a measure of the energy deposited in the gas by the incident radiation, i.e. the dose delivered. To measure a delivered dose it is necessary to measure the total charge produced, which is the product of ionisation current and time; consequently, measurement of dose rate is achieved directly by the measurement of the ionisation current.

The ionised gas contains essentially static ions and mobile electrons which are only too keen to find each other and to recombine. To constitute a flow of ionisation current it is necessary to separate them by an electric field, which is created by the application of voltage to collecting electrodes as shown in Figure 2.
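To give a feel for the size of the currents involved, the following sketch estimates the ionisation current for an air-filled chamber at a given exposure rate, and the charge collected over a period. It is illustrative only and assumes complete collection of the charge, the conventional equivalence 1 R = 2.58 × 10⁻⁴ C/kg of air, and a dry air density of 1.293 kg/m³ at standard temperature and pressure. The function names and the one litre chamber are hypothetical.

```python
# Minimal sketch: ionisation current and collected charge for an air chamber.
# ASSUMPTIONS (not taken from the note): complete charge collection,
# 1 R = 2.58e-4 C per kg of air, and dry air at STP (1.293 kg/m^3).
ROENTGEN_C_PER_KG = 2.58e-4
AIR_DENSITY_KG_PER_M3 = 1.293
SECONDS_PER_HOUR = 3600.0


def ionisation_current_amps(exposure_rate_r_per_h: float,
                            volume_litres: float) -> float:
    """Current from an air-filled chamber of the given volume at STP."""
    air_mass_kg = AIR_DENSITY_KG_PER_M3 * volume_litres * 1e-3  # litres -> m^3
    charge_per_hour_c = exposure_rate_r_per_h * ROENTGEN_C_PER_KG * air_mass_kg
    return charge_per_hour_c / SECONDS_PER_HOUR


def collected_charge_coulombs(current_amps: float, seconds: float) -> float:
    """Total charge is the product of ionisation current and time."""
    return current_amps * seconds


if __name__ == "__main__":
    current = ionisation_current_amps(exposure_rate_r_per_h=1.0, volume_litres=1.0)
    print(f"current at 1 R/h in 1 litre: {current:.3e} A")
    print(f"charge after one hour:       "
          f"{collected_charge_coulombs(current, SECONDS_PER_HOUR):.3e} C")
```

Under these assumptions a one litre chamber at 1 R/h delivers a current of the order of 10⁻¹⁰ A, which indicates the sensitivity required of the measuring electrometer.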

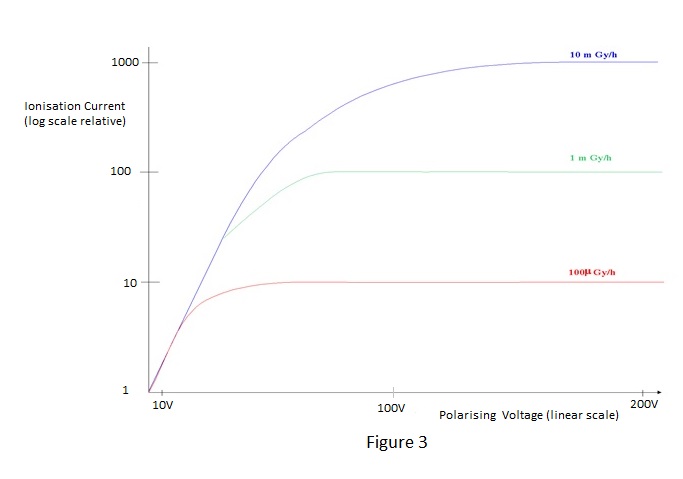

The total current flow is constituted by a flow of electrons to the positive electrode and a flow of positive ions to the negative electrode. The applied voltage is known as the 'polarising voltage'. Because the ions are very slow moving relative to electrons under the influence of an electric field, recombination will occur unless the field, and therefore the polarising voltage, is sufficiently high to prevent it. Also, the presence of intense ionisation has the effect of reducing the field and consequently a much higher voltage is required for ion collection at high dose-rates than for low ones. Complete collection is known as 'saturation' and the aim is usually to achieve 95% saturation for the highest dose-rate to be measured.

Too high a field will give the electrons sufficient kinetic energy to produce ionisation themselves and to produce the effect known as 'gas amplification'. Gas amplification is employed in proportional and Geiger counters, which are outside the scope of this note, and is unlikely to be a problem for practical ionisation chambers. An example of typical saturation characteristics for varying dose-rates is shown in Figure 3.

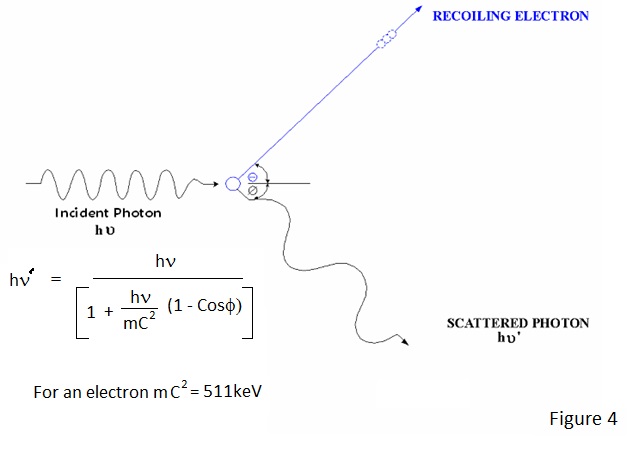

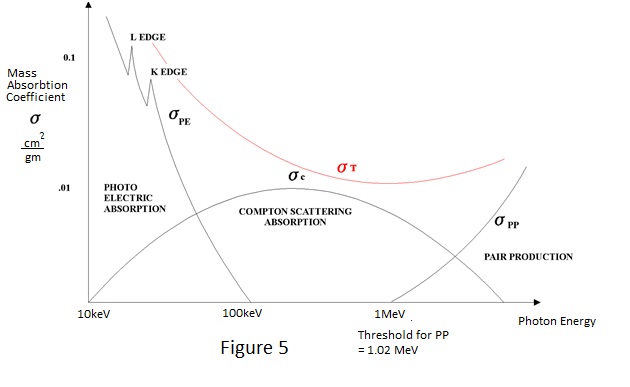

HOW IONISATION OCCURS

By now the author may have given the impression that radiation interacts with matter losing kinetic energy in a series of approximately 15 eV decrements. This is far from the actual situation and, if it were true, the energy loss would be minimal. At photon energies normally of interest in radiological protection instrumentation, interactions are initially at much higher energy levels because the radiation interacts with electrons in orbits close to the nucleus or with the nucleus itself, and the final level of ionisation is the result of many cascading interactions. As a result of these higher energy interactions most of the ionisation is due to energetic electrons which lose their kinetic energy more rapidly than photons.

Photoelectric interaction occurs for low photon energies up to about 150 keV. In fact, the ejection of a valence electron at an energy of about 15 eV, as discussed earlier, is a photoelectric interaction. At energies of interest in radiological protection - 30 keV and above - electrons are ejected from the inner orbits around the nucleus in a preferential manner. This is best illustrated by an example: to eject an electron from the innermost 'K shell' orbits of lead requires energies in excess of 75 keV and to eject one from the next 'L shell' requires about 15 keV.

Compton scattering is the primary mode of interaction for gamma ray energies in the region 100 keV to 1.5 MeV, effectively taking over where the photoelectric interaction leaves off. It appears to be the direct result of interaction of an incident photon and any electron and the result is somewhat similar to what might be expected in a collision between electrons. In a Compton interaction an electron recoils and the incident photon is deflected from its path, losing energy in the process. The energy and direction of the recoiling electron and the energy and direction of the deflected photon can be equated to the energy of the incident photon as depicted in Figure 5.
The Compton scattered photon and electron produce further interactions and ionisation.
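
For readers who want numbers, the energy sharing in a Compton interaction can be sketched with the standard Compton relation. This formula does not appear in the note itself. The sketch assumes the electron is free and initially at rest, and the function names are hypothetical.

```python
import math

# Minimal sketch of Compton energy sharing using the standard Compton relation.
# ASSUMPTION: the struck electron is free and at rest (binding energy ignored).
ELECTRON_REST_ENERGY_KEV = 510.99895


def scattered_photon_energy_kev(incident_kev: float, angle_deg: float) -> float:
    """Energy of the photon after deflection through angle_deg."""
    theta = math.radians(angle_deg)
    ratio = incident_kev / ELECTRON_REST_ENERGY_KEV
    return incident_kev / (1.0 + ratio * (1.0 - math.cos(theta)))


def recoil_electron_energy_kev(incident_kev: float, angle_deg: float) -> float:
    """Kinetic energy of the recoil electron, from conservation of energy."""
    return incident_kev - scattered_photon_energy_kev(incident_kev, angle_deg)


if __name__ == "__main__":
    incident = 662.0  # keV; example gamma energy (Cs-137), chosen for illustration
    for angle in (0.0, 45.0, 90.0, 180.0):
        photon = scattered_photon_energy_kev(incident, angle)
        electron = recoil_electron_energy_kev(incident, angle)
        print(f"{angle:5.0f} deg: photon {photon:6.1f} keV, "
              f"electron {electron:6.1f} keV")
```

For a 662 keV photon scattered straight back (180 degrees) the photon retains about 184 keV and the remainder goes to the recoil electron, which then goes on to ionise the gas.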
